Preface

A face recognition library written in JavaScript (face-api.js). Project link:

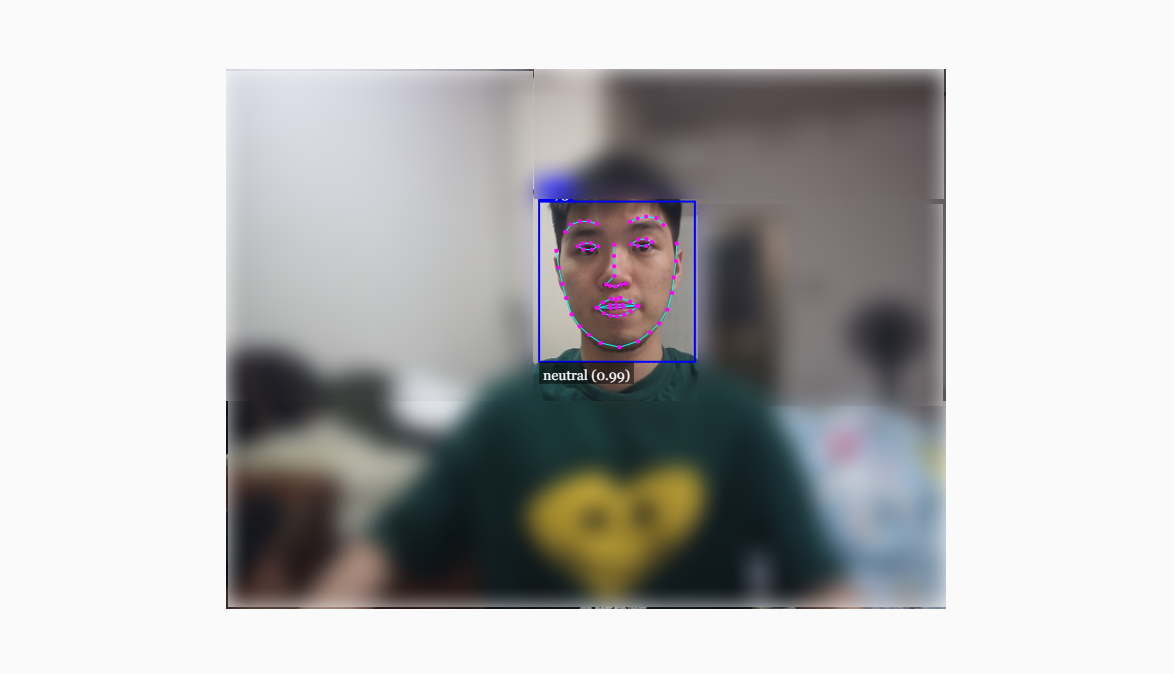

Example

Let's implement it by following an expert's walkthrough:

app.js

```javascript
const video = document.getElementById("video"); // bind the `video` variable to the <video> element

// Request the webcam stream and play it in the <video> element
const startVideo = () => {
  navigator.mediaDevices
    .getUserMedia({ video: {} })
    .then((stream) => (video.srcObject = stream))
    .catch((err) => console.error(err));
};

// Load the pre-trained models from ./models (each model only once)
Promise.all([
  faceapi.nets.tinyFaceDetector.loadFromUri("./models"),
  faceapi.nets.faceLandmark68Net.loadFromUri("./models"),
  faceapi.nets.faceRecognitionNet.loadFromUri("./models"),
  faceapi.nets.faceExpressionNet.loadFromUri("./models"),
]).then(startVideo); // pass the function itself so the video starts only after all models have loaded

video.addEventListener("play", () => {
  // Overlay a canvas the same size as the video to draw the results on
  const canvas = faceapi.createCanvasFromMedia(video);
  document.body.append(canvas);
  const displaySize = { width: video.width, height: video.height };
  faceapi.matchDimensions(canvas, displaySize);

  setInterval(async () => {
    const detections = await faceapi
      .detectAllFaces(video, new faceapi.TinyFaceDetectorOptions())
      .withFaceLandmarks()
      .withFaceExpressions();
    // console.log(detections); // an array with one object per detected face
    const resizedDetections = faceapi.resizeResults(detections, displaySize);
    canvas.getContext("2d").clearRect(0, 0, canvas.width, canvas.height); // clear the old drawings before drawing new ones
    faceapi.draw.drawDetections(canvas, resizedDetections);
    faceapi.draw.drawFaceLandmarks(canvas, resizedDetections);
    faceapi.draw.drawFaceExpressions(canvas, resizedDetections);
  }, 100); // run detection every 100 ms
});
```
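The comment on `Promise.all` intends for the video to start only after the models have loaded. Whether that actually happens depends on passing `startVideo` to `.then` rather than calling `startVideo()`: the call runs immediately and hands its return value (`undefined`) to `.then`, which then has nothing to wait for. The plain-JavaScript sketch below records the order of events in both cases; `fakeLoad` is a hypothetical stand-in for `loadFromUri` that simply resolves after a timer.

```javascript
// Hypothetical stand-in for a model loader such as loadFromUri: resolves after `ms` milliseconds.
const fakeLoad = (name, log, ms) =>
  new Promise((resolve) =>
    setTimeout(() => {
      log.push(`loaded ${name}`);
      resolve();
    }, ms)
  );

// Runs two loaders, then "starts the video"; returns the order in which things happened.
async function runScenario(callImmediately) {
  const log = [];
  const startVideo = () => {
    log.push("startVideo"); // returns undefined, like the original startVideo
  };
  const loaders = Promise.all([fakeLoad("detector", log, 10), fakeLoad("landmarks", log, 20)]);
  if (callImmediately) {
    // Bug: startVideo() executes right now, before any loader finishes; .then() receives undefined.
    await loaders.then(startVideo());
  } else {
    // Correct: .then() receives the function and calls it once every loader has resolved.
    await loaders.then(startVideo);
  }
  return log;
}

runScenario(true).then((log) => console.log("then(startVideo()):", log));
runScenario(false).then((log) => console.log("then(startVideo):  ", log));
```

With `startVideo()` the log begins with `startVideo`; with `startVideo` it ends with it, after both loaders.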

index.html

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta http-equiv="X-UA-Compatible" content="IE=edge">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Face-Test-Issactan</title>
  <link rel="shortcut icon" href="https://cdn.jsdelivr.net/gh/Tan35/ImgHosting/TanPIC2/faciapi-js-tets.png">
  <!-- Styles belong inside <head> -->
  <style>
    body {
      margin: 0;
      padding: 0;
      width: 100vw;
      height: 100vh;
      display: flex;
      justify-content: center;
      align-items: center;
      background-color: #fafafa;
    }
    /* Place the drawing canvas on top of the video */
    canvas {
      position: absolute;
    }
  </style>
</head>
<body>
  <video id="video" width="720" height="560" autoplay muted></video>
  <!-- Load face-api.min.js first, then the app script that uses it -->
  <script src="face-api.min.js"></script>
  <script src="app.js"></script>
</body>
</html>
```
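The `width="720"` and `height="560"` attributes on `<video>` become `displaySize` in app.js, and `faceapi.resizeResults` rescales the detections to that size so the boxes line up with the overlaid canvas rather than with the camera's native frame size. The idea is a per-axis scale factor. The sketch below shows only that arithmetic on a plain `{ x, y, width, height }` box; it is an illustration, not face-api.js's actual implementation, and the 640 × 480 camera resolution is an assumed example.

```javascript
// Illustrative only: scale a box from the camera frame's size to the displayed size.
// This mirrors the idea behind faceapi.resizeResults, not its source code.
function scaleBox(box, from, to) {
  const sx = to.width / from.width; // horizontal scale factor
  const sy = to.height / from.height; // vertical scale factor
  return {
    x: box.x * sx,
    y: box.y * sy,
    width: box.width * sx,
    height: box.height * sy,
  };
}

// Assumed camera resolution; display size taken from <video width="720" height="560">.
const frameSize = { width: 640, height: 480 };
const displaySize = { width: 720, height: 560 };

console.log(scaleBox({ x: 320, y: 240, width: 64, height: 48 }, frameSize, displaySize));
```

Because the two axes are scaled independently, a camera whose aspect ratio differs from 720:560 still maps onto the canvas, at the cost of slightly stretched boxes.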

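You will also need the following:

- `face-api.min.js`: in index.html, reference it first, then reference your own app.js after it.
- A `models` folder: create a new folder (assumed here to be named `models`), download the whole project, then copy all the files from its `/weights/` directory into `models`. These are the author's pre-trained models.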
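The copy step can also be scripted. A minimal Node.js sketch, assuming the project has been cloned into a folder named `face-api.js` next to index.html (both default paths are assumptions; pass your own if they differ). It uses `fs.cpSync`, which is available in Node.js 16.7 and later.

```javascript
const fs = require("node:fs");
const path = require("node:path");

// Copy every file from the project's weights folder into the models folder that app.js loads from.
// The default paths are hypothetical; adjust them to where you downloaded the project.
function copyWeights(srcDir = path.join("face-api.js", "weights"), destDir = "models") {
  if (!fs.existsSync(srcDir)) {
    throw new Error(`weights folder not found: ${srcDir}`);
  }
  fs.mkdirSync(destDir, { recursive: true }); // create models/ if it does not exist yet
  fs.cpSync(srcDir, destDir, { recursive: true }); // copy all files (and any subfolders)
  return fs.readdirSync(destDir); // names of the entries now in models/
}

module.exports = { copyWeights };
```

Calling `copyWeights()` from the folder that contains index.html fills `models/` with the manifest (`.json`) and shard files that `loadFromUri("./models")` fetches.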

Running

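- Open index.html in VS Code with the Live Server extension, or open the file directly (note that some browsers block loading the model files from a `file://` URL, in which case a local server is needed). On first run, the browser will ask for permission to use the camera.
- I later also deployed it to Vercel; demo: 🔗 face-Test-IssacTan.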
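If Live Server isn't available, any static file server works. Below is a minimal Node.js sketch using only the built-in `http`, `fs`, and `path` modules; the MIME table and port are assumptions, and it is meant for local testing only (no caching, only a few file types). Since `http://localhost` counts as a secure context, the browser still allows camera access.

```javascript
const http = require("node:http");
const fs = require("node:fs");
const path = require("node:path");

// Content types for the files this demo serves (an assumed, minimal list; extend as needed).
// Model shard files have no extension and fall back to application/octet-stream.
const MIME = {
  ".html": "text/html; charset=utf-8",
  ".js": "text/javascript",
  ".json": "application/json",
  ".png": "image/png",
};

function contentTypeFor(file) {
  return MIME[path.extname(file).toLowerCase()] || "application/octet-stream";
}

// Serve static files from `root` (default: the current directory).
function createStaticServer(root = process.cwd()) {
  const base = path.resolve(root);
  return http.createServer((req, res) => {
    let urlPath;
    try {
      urlPath = decodeURIComponent(new URL(req.url, "http://localhost").pathname);
    } catch {
      res.writeHead(400).end("Bad request");
      return;
    }
    const file = path.join(base, urlPath === "/" ? "index.html" : urlPath);
    // Refuse any path that resolves outside the served folder.
    if (!file.startsWith(base + path.sep)) {
      res.writeHead(403).end("Forbidden");
      return;
    }
    fs.readFile(file, (err, data) => {
      if (err) {
        res.writeHead(404).end("Not found");
        return;
      }
      res.writeHead(200, { "Content-Type": contentTypeFor(file) }).end(data);
    });
  });
}

module.exports = { contentTypeFor, createStaticServer };
```

Saved as, say, `serve.js` (a hypothetical name) next to index.html, it can be started with `node -e "require('./serve.js').createStaticServer().listen(8080)"`, after which the page is available at `http://localhost:8080`.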