2024-5-15: The dataset link has been updated; see the end of this post.

Preface

While reproducing some code, I tried to run an experiment with the Stanford Cars dataset that ships with the torchvision library. The call looks like this:

    import torch
    import torchvision

    stanford_cars_data = torchvision.datasets.StanfordCars(root=".", download=True)

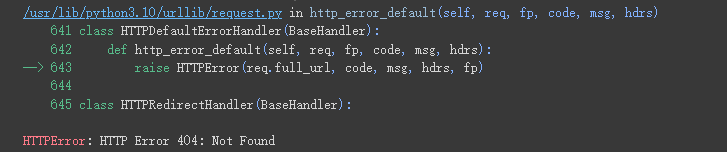

报错,返回如下结果:

Solution

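In torchvision.datasets.StanfordCars(root=".", download=True), setting download to True makes torchvision fetch the dataset directly from the internet. Since that now fails with a 404, the first step is to find out where the dataset is downloaded from and visit that address to see whether the page is gone. That means reading the source of torchvision.datasets.StanfordCars. You can Ctrl-click the name in your editor to jump to the source, open stanford_cars.py under /.../anaconda3/envs/xxx/lib/python3.x/site-packages/torchvision/datasets/ in your Anaconda installation, or read the source that PyTorch publishes online. The source is reproduced below: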

    import pathlib
    from typing import Callable, Optional, Any, Tuple

    from PIL import Image

    from .utils import download_and_extract_archive, download_url, verify_str_arg
    from .vision import VisionDataset


    class StanfordCars(VisionDataset):
        """`Stanford Cars <https://ai.stanford.edu/~jkrause/cars/car_dataset.html>`_ Dataset

        The Cars dataset contains 16,185 images of 196 classes of cars. The data is
        split into 8,144 training images and 8,041 testing images, where each class
        has been split roughly in a 50-50 split

        .. note::

            This class needs `scipy <https://docs.scipy.org/doc/>`_ to load target files from `.mat` format.

        Args:
            root (string): Root directory of dataset
            split (string, optional): The dataset split, supports ``"train"`` (default) or ``"test"``.
            transform (callable, optional): A function/transform that takes in an PIL image
                and returns a transformed version. E.g, ``transforms.RandomCrop``
            target_transform (callable, optional): A function/transform that takes in the
                target and transforms it.
            download (bool, optional): If True, downloads the dataset from the internet and
                puts it in root directory. If dataset is already downloaded, it is not
                downloaded again."""

        def __init__(
            self,
            root: str,
            split: str = "train",
            transform: Optional[Callable] = None,
            target_transform: Optional[Callable] = None,
            download: bool = False,
        ) -> None:

            try:
                import scipy.io as sio
            except ImportError:
                raise RuntimeError("Scipy is not found. This dataset needs to have scipy installed: pip install scipy")

            super().__init__(root, transform=transform, target_transform=target_transform)

            self._split = verify_str_arg(split, "split", ("train", "test"))
            self._base_folder = pathlib.Path(root) / "stanford_cars"
            devkit = self._base_folder / "devkit"

            if self._split == "train":
                self._annotations_mat_path = devkit / "cars_train_annos.mat"
                self._images_base_path = self._base_folder / "cars_train"
            else:
                self._annotations_mat_path = self._base_folder / "cars_test_annos_withlabels.mat"
                self._images_base_path = self._base_folder / "cars_test"

            if download:
                self.download()

            if not self._check_exists():
                raise RuntimeError("Dataset not found. You can use download=True to download it")

            self._samples = [
                (
                    str(self._images_base_path / annotation["fname"]),
                    annotation["class"] - 1,  # Original target mapping starts from 1, hence -1
                )
                for annotation in sio.loadmat(self._annotations_mat_path, squeeze_me=True)["annotations"]
            ]

            self.classes = sio.loadmat(str(devkit / "cars_meta.mat"), squeeze_me=True)["class_names"].tolist()
            self.class_to_idx = {cls: i for i, cls in enumerate(self.classes)}

        def __len__(self) -> int:
            return len(self._samples)

        def __getitem__(self, idx: int) -> Tuple[Any, Any]:
            """Returns pil_image and class_id for given index"""
            image_path, target = self._samples[idx]
            pil_image = Image.open(image_path).convert("RGB")

            if self.transform is not None:
                pil_image = self.transform(pil_image)
            if self.target_transform is not None:
                target = self.target_transform(target)
            return pil_image, target

        def download(self) -> None:
            if self._check_exists():
                return

            download_and_extract_archive(
                url="https://ai.stanford.edu/~jkrause/cars/car_devkit.tgz",
                download_root=str(self._base_folder),
                md5="c3b158d763b6e2245038c8ad08e45376",
            )
            if self._split == "train":
                download_and_extract_archive(
                    url="https://ai.stanford.edu/~jkrause/car196/cars_train.tgz",
                    download_root=str(self._base_folder),
                    md5="065e5b463ae28d29e77c1b4b166cfe61",
                )
            else:
                download_and_extract_archive(
                    url="https://ai.stanford.edu/~jkrause/car196/cars_test.tgz",
                    download_root=str(self._base_folder),
                    md5="4ce7ebf6a94d07f1952d94dd34c4d501",
                )
                download_url(
                    url="https://ai.stanford.edu/~jkrause/car196/cars_test_annos_withlabels.mat",
                    root=str(self._base_folder),
                    md5="b0a2b23655a3edd16d84508592a98d10",
                )

        def _check_exists(self) -> bool:
            if not (self._base_folder / "devkit").is_dir():
                return False

            return self._annotations_mat_path.exists() and self._images_base_path.is_dir()

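The URLs in the download() method above are the addresses the Stanford Cars dataset is fetched from. Opening them shows that the files have indeed been taken down, which explains the 404 error.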

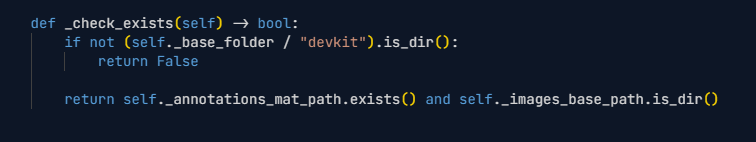
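Instead of opening each address in a browser, the same check can be scripted. The following is a minimal sketch using only the standard library; the helper name http_status and its opener parameter are my own for illustration and are not part of torchvision. It sends a HEAD request and returns the status code, so a removed file should show up as 404.

```python
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, opener: Callable = urllib.request.urlopen, timeout: float = 10.0) -> int:
    """Return the HTTP status code of a HEAD request to `url`.

    `opener` is a hypothetical injection point (defaults to urlopen) so the
    function can be exercised without network access.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with opener(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses; the code is on the exception.
        # Other failures (DNS errors, timeouts) are not caught and will propagate.
        return err.code


# Example call (requires network access), using a URL from the source above:
# print(http_status("https://ai.stanford.edu/~jkrause/car196/cars_train.tgz"))
```

Running the commented call against each URL found in download() gives a quick picture of which files are still reachable.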

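In other words, download=True is no longer an option; only download=False works. Reading further in the code above, with download=False the constructor still calls _check_exists() to verify that the data is already on disk. The requirements are: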

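_base_folder is the "stanford_cars" folder under root. When the split argument of torchvision.datasets.StanfordCars is not specified, it defaults to "train", so _annotations_mat_path is "cars_train_annos.mat" inside "devkit" and _images_base_path is the "cars_train" folder. With split="test", they are "cars_test_annos_withlabels.mat", located directly under "stanford_cars", and the "cars_test" folder.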

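So _check_exists() returns True only when self._base_folder / "devkit" is a directory, the file at self._annotations_mat_path exists, and self._images_base_path is a directory; otherwise it returns False. Only when this self-check passes can download=False be used. If it fails, the constructor raises the "Dataset not found" error. The dataset we place on disk must therefore follow this directory layout: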

    └── stanford_cars
        ├── cars_test_annos_withlabels.mat
        ├── cars_train
        │   ├── xxx.jpg
        │   ├── xxx.jpg
        │   └── ...
        ├── cars_test
        │   ├── xxx.jpg
        │   ├── xxx.jpg
        │   └── ...
        └── devkit
            ├── cars_meta.mat
            ├── cars_test_annos.mat
            ├── cars_train_annos.mat
            ├── eval_train.m
            ├── README.txt
            └── train_perfect_preds.txt
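Before constructing the dataset, the layout can be verified with a small script. This is a sketch that mirrors the paths used in _check_exists() and in the constructor of the source shown earlier; the function name missing_stanford_cars_paths is hypothetical and not part of torchvision. It also checks cars_meta.mat, which _check_exists() does not test but the constructor reads afterwards.

```python
from pathlib import Path
from typing import List


def missing_stanford_cars_paths(root: str, split: str = "train") -> List[Path]:
    """List the paths the StanfordCars loader needs but cannot find under `root`."""
    base = Path(root) / "stanford_cars"
    devkit = base / "devkit"

    # Same branch as the constructor: train annotations live in devkit,
    # test annotations (with labels) live directly under stanford_cars.
    if split == "train":
        annotations = devkit / "cars_train_annos.mat"
        images = base / "cars_train"
    else:
        annotations = base / "cars_test_annos_withlabels.mat"
        images = base / "cars_test"

    missing = []
    if not devkit.is_dir():
        missing.append(devkit)
    if not annotations.exists():
        missing.append(annotations)
    if not images.is_dir():
        missing.append(images)

    # Not part of _check_exists(), but loaded right after it for class names.
    meta = devkit / "cars_meta.mat"
    if not meta.exists():
        missing.append(meta)
    return missing
```

An empty list for both split="train" and split="test" suggests the folder is laid out the way the loader expects; any returned path points at what still needs to be copied into place.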

Dataset backup

The file tree requirement above is relative to the root argument, here root="." in stanford_cars_data = torchvision.datasets.StanfordCars(root=".", download=True). stanford_cars.py looks under that location and checks whether the dataset's file tree is correct.
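Because a relative root is resolved against the current working directory, it can help to print the folder the loader will actually look in. The sketch below assumes only the pathlib.Path(root) / "stanford_cars" expression from the source; the helper name expected_base_folder is made up for illustration.

```python
from pathlib import Path


def expected_base_folder(root: str) -> Path:
    """Absolute path of the folder the dataset class will look in for `root`."""
    # Mirrors pathlib.Path(root) / "stanford_cars" from the source; resolve()
    # turns a relative root such as "." into an absolute path based on the
    # current working directory.
    return Path(root).resolve() / "stanford_cars"


# Example: print(expected_base_folder("."))
```

If the printed path is not where the dataset was copied, either move the stanford_cars folder there or pass that location as root.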

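So it is enough to place the dataset folder at the location given by root. With root=".", that is the directory the script is run from, which is usually the same directory as the code that calls the method. I found a copy of the dataset online and backed it up to CowTransfer (its policy has since changed and upload space is limited) and Baidu Netdisk. Help yourself if you need it; the link is permanently valid.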

- BaiduYun 👏 Extraction code: 1pnm